Use `sync.Pool` to reduce memory consumption

Identifying the problem

Our service works like an Excel document datastore, and we use xorm as our ORM framework.

Every time we need to get data from the DB, we call session.Find(&[]Author{}) with a slice of table beans.

But this approach has a problem:

- Memory allocation is very high

So whenever many clients try to download an Excel file at once, memory consumption gets too high, and downloading the file takes too long to complete.

Find the root cause with pprof

I wrote a benchmark and, using Go’s pprof profiling tool, we can easily inspect the flame graph with a tool like Pyroscope.

Here’s the result we got:

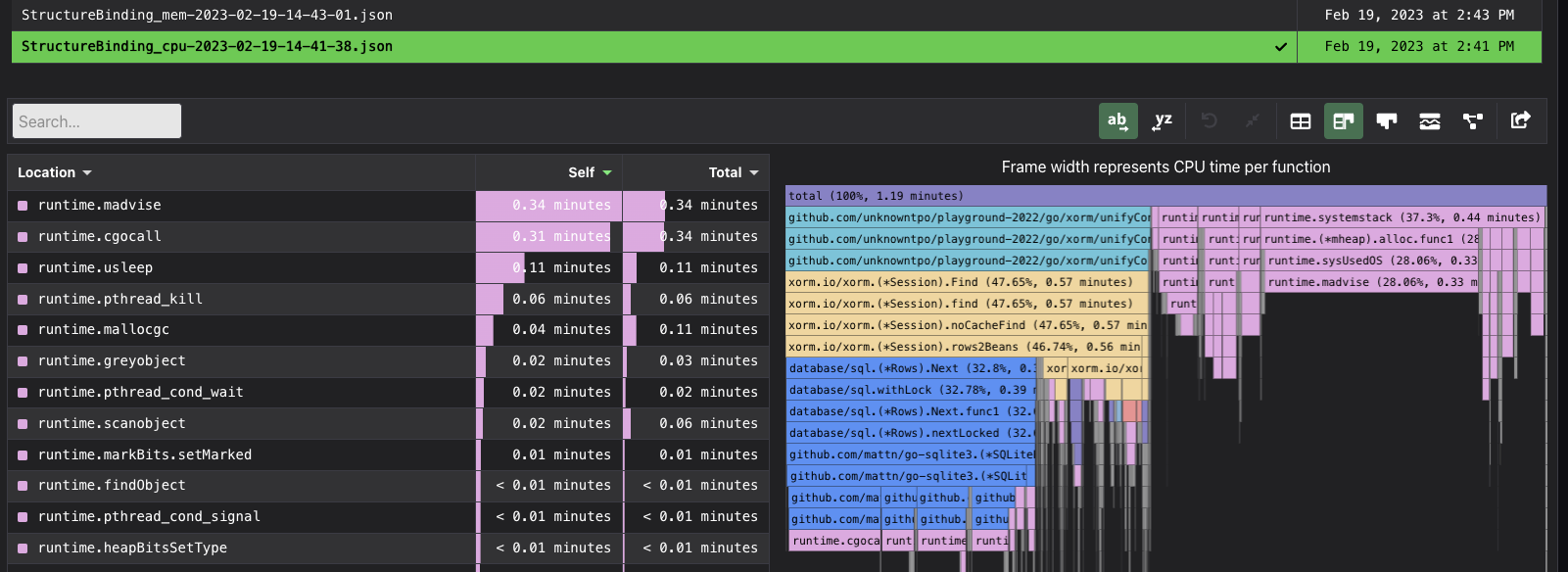

CPU

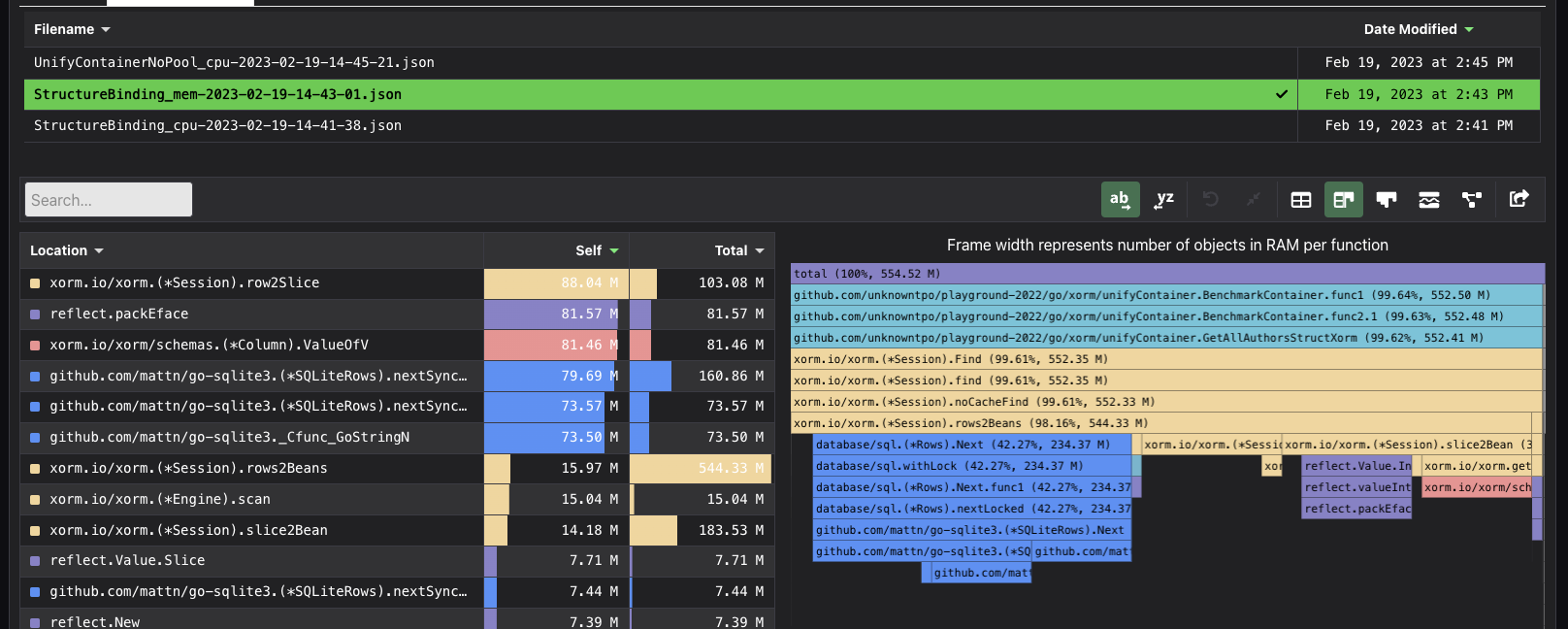

Memory Allocation

We can see that under the (*Session).rows2Beans frame, aside from the functions inside the xorm framework that we can’t touch, (*Session).slice2Bean took a lot of CPU time and made a lot of memory allocations.

The problem of Structure Binding

After taking a look at the code in noCacheFind, I found that if we use a bean (a struct that carries the DB schema definition) to hold the result set, xorm calls session.rows2Beans to convert the rows into table beans.

In session.rows2Beans(), it will:

- convert rows to slices ([]any) by calling session.row2Slice()
- convert []any to []bean by calling session.slice2Bean()
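To give a feel for why this two-step conversion is costly, here is a minimal sketch of the general pattern. It is not xorm's actual code: the `Author` fields and the helpers `rowToSlice` and `sliceToBean` are hypothetical. Each row is first boxed into a `[]any`, then copied field by field into a struct through reflection.

```go
package main

import (
	"fmt"
	"reflect"
)

// Author is a hypothetical table bean; the real schema is not shown in this post.
type Author struct {
	ID   int64
	Name string
}

// rowToSlice mimics step 1: every column value is boxed into an interface,
// which costs a slice allocation per row on top of the boxing itself.
func rowToSlice(cols ...any) []any {
	out := make([]any, len(cols))
	copy(out, cols)
	return out
}

// sliceToBean mimics step 2: values are copied into the struct fields by
// position using reflection. bean must be a pointer to a struct.
func sliceToBean(vals []any, bean any) error {
	v := reflect.ValueOf(bean)
	if v.Kind() != reflect.Pointer || v.Elem().Kind() != reflect.Struct {
		return fmt.Errorf("bean must be a pointer to a struct, got %T", bean)
	}
	v = v.Elem()
	if len(vals) != v.NumField() {
		return fmt.Errorf("got %d values for %d fields", len(vals), v.NumField())
	}
	for i, val := range vals {
		field := v.Field(i)
		rv := reflect.ValueOf(val)
		if !rv.IsValid() || !rv.Type().AssignableTo(field.Type()) {
			return fmt.Errorf("column %d: cannot assign %T to %s", i, val, field.Type())
		}
		field.Set(rv)
	}
	return nil
}

func main() {
	var a Author
	if err := sliceToBean(rowToSlice(int64(1), "Ada"), &a); err != nil {
		panic(err)
	}
	fmt.Printf("%+v\n", a)
}
```

Both the boxing and the reflective field access happen once per column per row, so the cost grows with the size of the result set.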

And this takes a lot of time.

But I also found that if we use [][]string to hold the result set, then after getting xorm.Rows (whose underlying data structure is database/sql.Rows), noCacheFind() simply calls rows.Scan for each row. So simple! This is our chance to make session.Find() much faster.
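The direct-scan path can be sketched with plain `database/sql` semantics. This is an illustration of the pattern, not xorm's internals: `rowSource`, `scanStrings`, and `fakeRows` are hypothetical names, and the sketch assumes no column is NULL (scanning NULL into a `*string` fails in `database/sql`). The small interface lets the example run without a database driver while still accepting a real `*sql.Rows`.

```go
package main

import (
	"database/sql"
	"fmt"
)

// rowSource is the subset of *sql.Rows this sketch needs.
type rowSource interface {
	Columns() ([]string, error)
	Next() bool
	Scan(dest ...any) error
	Err() error
}

// Compile-time check that a real *sql.Rows can be passed to scanStrings.
var _ rowSource = (*sql.Rows)(nil)

// scanStrings reads every row into a []string with one Scan call per row.
func scanStrings(rows rowSource) ([][]string, error) {
	cols, err := rows.Columns()
	if err != nil {
		return nil, err
	}
	var result [][]string
	dest := make([]any, len(cols)) // reused for every row
	for rows.Next() {
		row := make([]string, len(cols))
		for i := range row {
			dest[i] = &row[i] // Scan writes straight into the row
		}
		if err := rows.Scan(dest...); err != nil {
			return nil, err
		}
		result = append(result, row)
	}
	return result, rows.Err()
}

// fakeRows is an in-memory stand-in for a query result, for demonstration only.
type fakeRows struct {
	cols []string
	data [][]string
	pos  int
}

func (f *fakeRows) Columns() ([]string, error) { return f.cols, nil }
func (f *fakeRows) Next() bool                 { f.pos++; return f.pos <= len(f.data) }
func (f *fakeRows) Err() error                 { return nil }
func (f *fakeRows) Scan(dest ...any) error {
	for i, d := range dest {
		*(d.(*string)) = f.data[f.pos-1][i]
	}
	return nil
}

func main() {
	src := &fakeRows{cols: []string{"id", "name"}, data: [][]string{{"1", "Ada"}, {"2", "Bob"}}}
	rows, err := scanStrings(src)
	if err != nil {
		panic(err)
	}
	fmt.Println(rows)
}
```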

Step 1: Use [][]string to hold the data

Based on this assumption, we can use [][]string to avoid the cost of structure binding. You can see this in the unifyContainerNoPool benchmark below.

Step 2: Use sync.Pool to reduce memory allocation

But it still needs a huge number of memory allocations for every []string and every [][]string. Let’s see how we can reduce this cost.

The solution I came up with is very simple: if memory allocation is time-consuming, why don’t we reuse the data structures that are already in memory? In this case, we’re using [][]string, so that is what we pool.
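A minimal sketch of this idea with `sync.Pool` could look like the following. The names `rowsPool`, `getRows`, `putRows`, and `appendRow`, as well as the initial capacity, are assumptions for illustration and may differ from the code in the repo. The pool stores pointers to the slice so that `Put` does not allocate, and `appendRow` also reuses the inner `[]string` buffers left over from a previous use.

```go
package main

import (
	"fmt"
	"sync"
)

// rowsPool hands out reusable [][]string containers.
var rowsPool = sync.Pool{
	New: func() any {
		rows := make([][]string, 0, 1024) // initial capacity is an arbitrary guess
		return &rows
	},
}

// getRows returns an empty container, possibly backed by an earlier buffer.
func getRows() *[][]string {
	return rowsPool.Get().(*[][]string)
}

// putRows resets the length and hands the container back to the pool.
// The caller must not touch rows afterwards.
func putRows(rows *[][]string) {
	*rows = (*rows)[:0]
	rowsPool.Put(rows)
}

// appendRow adds one row, reusing the inner []string that a previous user
// left at that index when the outer slice still has spare capacity.
func appendRow(rows *[][]string, cols ...string) {
	n := len(*rows)
	if n < cap(*rows) {
		*rows = (*rows)[:n+1]
		(*rows)[n] = append((*rows)[n][:0], cols...)
		return
	}
	*rows = append(*rows, append([]string(nil), cols...))
}

func main() {
	rows := getRows()
	appendRow(rows, "1", "Ada")
	appendRow(rows, "2", "Bob")
	fmt.Println(len(*rows), (*rows)[1][1])
	putRows(rows)
}
```

Keep in mind that `sync.Pool` may drop pooled items at any time (for example during garbage collection), so it reduces allocations on average rather than guaranteeing reuse.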


Experiment:

To demonstrate the improvement, I designed a simple benchmark. There are three ways we can get data from the database:

- Use []Author to hold the data (Structure Binding)
- Use [][]string to hold the data (Unify Container without sync.Pool)
- Use [][]string to hold the data, and use sync.Pool to reuse the [][]string (Unify Container with sync.Pool)

For row counts between 1000 and 8000, and to demonstrate the benefit of sync.Pool, we use runtime.NumCPU() workers to perform runtime.NumCPU()*4 jobs. Every job gets all rows from the author table.


The result shows that the number of allocations per operation differs considerably between the methods.

The Structure Binding method needs the largest number of allocations, and it is far slower than the other two methods. As the row count grows, its performance degrades very quickly.

The method using [][]string with sync.Pool, on the other hand, needs the smallest number of memory allocations. And because memory allocation takes a significant amount of time, it is also faster than the one without sync.Pool.
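If you want to check the allocation difference on your own machine, one option is `testing.AllocsPerRun` from the standard library. The sketch below is not the benchmark from this post: the row and column counts, `fillFresh`, and `fillPooled` are made up for illustration, and it prints whatever it measures rather than reproducing the results above.

```go
package main

import (
	"fmt"
	"sync"
	"testing"
)

const (
	numRows = 1000 // rows per simulated query (assumed)
	numCols = 3    // columns per row (assumed)
)

// sink keeps the fresh allocations reachable so they are not optimized away.
var sink [][]string

var pool = sync.Pool{New: func() any {
	rows := make([][]string, 0, numRows)
	return &rows
}}

// fillFresh allocates the outer slice and every row on each call.
func fillFresh() {
	rows := make([][]string, 0, numRows)
	for i := 0; i < numRows; i++ {
		rows = append(rows, make([]string, numCols))
	}
	sink = rows
}

// fillPooled takes a container from the pool and reuses the rows left in it,
// allocating a row only when the slot has no usable buffer yet.
func fillPooled() {
	p := pool.Get().(*[][]string)
	rows := (*p)[:numRows] // capacity is always at least numRows
	for i := range rows {
		if cap(rows[i]) < numCols {
			rows[i] = make([]string, numCols)
		}
		rows[i] = rows[i][:numCols]
	}
	*p = rows[:0]
	pool.Put(p)
}

func main() {
	fresh := testing.AllocsPerRun(100, fillFresh)
	pooled := testing.AllocsPerRun(100, fillPooled)
	fmt.Printf("fresh: %.0f allocs/op, pooled: %.0f allocs/op\n", fresh, pooled)
}
```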

Here’s the plot:

I put my code in the repo, please go check it out!